15.3 Condor Architecture

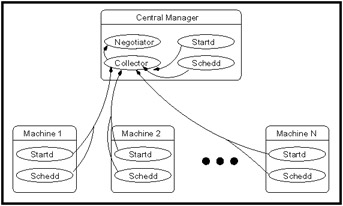

A Condor pool comprises a single machine that serves as the central manager and an arbitrary number of other machines that have joined the pool. Conceptually, the pool is a collection of resources (machines) and resource requests (jobs). The role of Condor is to match waiting requests with available resources. Every part of Condor sends periodic updates to the central manager, the centralized repository of information about the state of the pool. The central manager periodically assesses the current state of the pool and tries to match pending requests with the appropriate resources.

15.3.1 The Condor Daemons

In this subsection we describe all the daemons (background server processes) in Condor and the role each plays in the system.

- condor_master: This daemon's role is to simplify system administration. It is responsible for keeping the rest of the Condor daemons running on each machine in a pool. The master spawns the other daemons and periodically checks the timestamps on the binaries of the daemons it is managing. If it finds new binaries, the master will restart the affected daemons. This allows Condor to be upgraded easily. In addition, if any other Condor daemon on the machine exits abnormally, the condor_master will send e-mail to the system administrator with information about the problem and then automatically restart the affected daemon. The condor_master also supports various administrative commands to start, stop, or reconfigure daemons remotely. The condor_master runs on every machine in your Condor pool.

- condor_startd: This daemon represents a machine to the Condor pool. It advertises a machine ClassAd that contains attributes about the machine's capabilities and policies. Running the startd enables a machine to execute jobs. The condor_startd is responsible for enforcing the policy under which remote jobs will be started, suspended, resumed, vacated, or killed. When the startd is ready to execute a Condor job, it spawns the condor_starter, described below.

- condor_starter: This program is the entity that spawns the remote Condor job on a given machine. It sets up the execution environment and monitors the job once it is running. The starter detects job completion, sends back status information to the submitting machine, and exits.

- condor_schedd: This daemon represents jobs to the Condor pool. Any machine that allows users to submit jobs needs to have a condor_schedd running. Users submit jobs to the condor_schedd, where they are stored in the job queue. The various tools to view and manipulate the job queue (such as condor_submit, condor_q, or condor_rm) connect to the condor_schedd to do their work.

- condor_shadow: This program runs on the machine where a job was submitted whenever that job is executing. The shadow serves requests for files to transfer, logs the job's progress, and reports statistics when the job completes. Jobs that are linked for Condor's Standard Universe, which perform remote system calls, do so via the condor_shadow. Any system call performed on the remote execute machine is sent over the network to the condor_shadow. The shadow performs the system call (such as file I/O) on the submit machine and the result is sent back over the network to the remote job.

- condor_collector: This daemon is responsible for collecting all the information about the status of a Condor pool. All other daemons periodically send ClassAd updates to the collector. These ClassAds contain all the information about the state of the daemons, the resources they represent, or resource requests in the pool (such as jobs that have been submitted to a given condor_schedd). The condor_collector can be thought of as a dynamic database of ClassAds. The condor_status command can be used to query the collector for specific information about various parts of Condor. The Condor daemons also query the collector for important information, such as what address to use for sending commands to a remote machine. The condor_collector runs on the machine designated as the central manager.

- condor_negotiator: This daemon is responsible for all the matchmaking within the Condor system. The negotiator is also responsible for enforcing user priorities in the system.
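The description of the condor_collector as a dynamic database of ClassAds can be made concrete with a small Python sketch. This is not Condor's implementation: the `ToyCollector` class, its method names, and the use of plain dictionaries as ads are assumptions made for illustration, and the attribute values are invented. The sketch shows only the two behaviors named in the list: periodic updates replace a daemon's earlier ad, and clients query the stored ads with a constraint.

```python
from typing import Any, Callable, Dict, List, Tuple

Ad = Dict[str, Any]  # a ClassAd modeled as a flat attribute dictionary (assumption)


class ToyCollector:
    """Hypothetical in-memory stand-in for the condor_collector."""

    def __init__(self) -> None:
        # One ad per (ad type, name); a newer update replaces the older ad.
        self._ads: Dict[Tuple[str, str], Ad] = {}

    def update(self, ad: Ad) -> None:
        """Store a periodic update sent by a daemon."""
        key = (str(ad["MyType"]), str(ad["Name"]))
        self._ads[key] = dict(ad)

    def query(self, ad_type: str,
              constraint: Callable[[Ad], bool] = lambda ad: True) -> List[Ad]:
        """Return all ads of one type that satisfy the constraint, ordered by name."""
        matches = [ad for (kind, _), ad in self._ads.items()
                   if kind == ad_type and constraint(ad)]
        return sorted(matches, key=lambda ad: str(ad["Name"]))


collector = ToyCollector()
collector.update({"MyType": "Machine", "Name": "node1", "State": "Unclaimed"})
collector.update({"MyType": "Machine", "Name": "node2", "State": "Unclaimed"})
# A later update from node2 replaces its earlier ad.
collector.update({"MyType": "Machine", "Name": "node2", "State": "Claimed"})

idle = collector.query("Machine", lambda ad: ad["State"] == "Unclaimed")
print([ad["Name"] for ad in idle])  # prints ['node1']
```

Keying the store by ad type and name is what makes the database "dynamic" in this sketch: the collector always holds the most recent view each daemon has reported, rather than a history of updates.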

15.3.2 The Condor Daemons in Action

Within a given Condor installation, one machine will serve as the pool's central manager. In addition to the condor_master daemon that runs on every machine in a Condor pool, the central manager runs the condor_collector and the condor_negotiator daemons. Any machine in the installation that should be capable of running jobs should run the condor_startd, and any machine that should maintain a job queue and therefore allow users on that machine to submit jobs should run a condor_schedd.
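The assignment of daemons to machines described above can be summarized as a small function. The sketch below is illustrative only: the function name `daemons_for` and its three boolean role flags are hypothetical and do not correspond to any Condor interface.

```python
from typing import List


def daemons_for(central_manager: bool, executes_jobs: bool,
                accepts_submissions: bool) -> List[str]:
    """Return the daemons a machine runs, given its (hypothetical) role flags."""
    daemons = ["condor_master"]  # runs on every machine in the pool
    if central_manager:
        daemons += ["condor_collector", "condor_negotiator"]
    if executes_jobs:
        daemons.append("condor_startd")
    if accepts_submissions:
        daemons.append("condor_schedd")
    return daemons


# A dedicated execute node versus a central manager that also submits and runs jobs.
print(daemons_for(False, True, False))
print(daemons_for(True, True, True))
```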

Condor allows any machine to execute jobs and serve as a submission point at the same time by running both a condor_startd and a condor_schedd. Figure 15.6 displays a Condor pool in which every machine, including the central manager, can both submit and run jobs.

Figure 15.6: Daemon layout of an idle Condor pool.

The interface for adding a job to the Condor system is condor_submit, which reads a job description file, creates a job ClassAd, and gives that ClassAd to the condor_schedd managing the local job queue. This triggers a negotiation cycle. During a negotiation cycle, the condor_negotiator queries the condor_collector to discover all machines that are willing to perform work and all users with idle jobs. The condor_negotiator communicates in user priority order with each condor_schedd that has idle jobs in its queue, and performs matchmaking to match jobs with machines such that both job and machine ClassAd requirements are satisfied and preferences (rank) are honored.
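The matchmaking step of a negotiation cycle can be sketched in Python as follows. This is a deliberate simplification, not the negotiator's algorithm: `ToyAd` and `negotiate` are hypothetical names, requirements and rank are modeled as Python functions rather than ClassAd expressions, the user list is assumed to arrive already sorted with the best priority first, and the tie-breaking rule (falling back to the machine's rank of the job) is an assumption. All attribute values are invented.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Attrs = Dict[str, Any]


@dataclass
class ToyAd:
    """Hypothetical stand-in for a ClassAd: attributes plus two policy functions."""
    name: str
    attrs: Attrs
    requirements: Callable[[Attrs], bool]  # must hold for the other party's attributes
    rank: Callable[[Attrs], float] = lambda other: 0.0  # higher means more preferred


def negotiate(users_by_priority: List[str],
              idle_jobs: Dict[str, List[ToyAd]],
              machines: List[ToyAd]) -> List[Tuple[str, str]]:
    """Run one simplified negotiation cycle and return (job, machine) matches."""
    available = list(machines)
    matches: List[Tuple[str, str]] = []
    for user in users_by_priority:  # best priority first
        for job in idle_jobs.get(user, []):
            # Both the job's and the machine's requirements must be satisfied.
            candidates = [m for m in available
                          if job.requirements(m.attrs) and m.requirements(job.attrs)]
            if not candidates:
                continue  # the job stays idle until a later cycle
            # Honor the job's rank first, then the machine's rank of the job.
            best = max(candidates,
                       key=lambda m: (job.rank(m.attrs), m.rank(job.attrs)))
            available.remove(best)
            matches.append((job.name, best.name))
    return matches


machines = [
    ToyAd("slow", {"Memory": 1024, "Mips": 500}, requirements=lambda job: True),
    ToyAd("fast", {"Memory": 4096, "Mips": 2000},
          requirements=lambda job: job["Owner"] != "bob"),
]
jobs = {
    "alice": [ToyAd("alice.0", {"Owner": "alice"},
                    requirements=lambda m: m["Memory"] >= 2048)],
    "bob": [ToyAd("bob.0", {"Owner": "bob"},
                  requirements=lambda m: m["Memory"] >= 512,
                  rank=lambda m: m["Mips"])],
}
matches = negotiate(["bob", "alice"], jobs, machines)
print(matches)  # prints [('bob.0', 'slow'), ('alice.0', 'fast')]
```

In this example bob's job prefers the faster machine, but that machine's own requirements exclude bob, so the job is matched to the slower one. The point of the sketch is that a match requires agreement from both sides, with rank used only to choose among acceptable candidates.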

Once the condor_negotiator makes a match, the condor_schedd claims the corresponding machine and is allowed to make subsequent scheduling decisions about the order in which jobs run. This hierarchical, distributed scheduling architecture enhances Condor's scalability and flexibility.

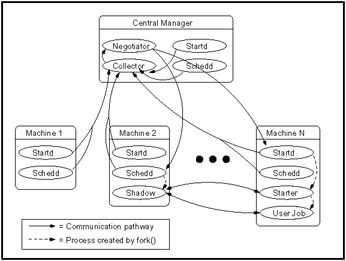

When the condor_schedd starts a job, it spawns a condor_shadow process on the submit machine, and the condor_startd spawns a condor_starter process on the corresponding execute machine (see Figure 15.7). The shadow transfers the job ClassAd and any data files required to the starter, which spawns the user's application.

Figure 15.7: Daemon layout when a job submitted from Machine 2 is running.

If the job is a Standard Universe job, the shadow will begin to service remote system calls originating from the user job, allowing the job to transparently access data files on the submitting host.
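The idea behind remote system calls can be illustrated with a short Python sketch in which a job-side stub forwards file operations to a shadow-side handler. Everything here is an assumption made for illustration: the JSON message format, the names `shadow_handle` and `RemoteFileStub`, and the two supported operations are invented, and the "network" is simulated by a direct function call. Condor's actual mechanism and wire protocol are not described in this section and are not reproduced here.

```python
import json
import os
import tempfile


def shadow_handle(request: str) -> str:
    """Shadow side: perform the requested file operation on the submit machine."""
    call = json.loads(request)
    if call["op"] == "read":
        with open(call["path"], "r", encoding="utf-8") as f:
            result = f.read()
    elif call["op"] == "append":
        with open(call["path"], "a", encoding="utf-8") as f:
            result = f.write(call["data"])
    else:
        return json.dumps({"ok": False, "error": "unsupported op"})
    return json.dumps({"ok": True, "result": result})


class RemoteFileStub:
    """Job side: looks like local file access but forwards every call."""

    def __init__(self, send):
        self._send = send  # hypothetical transport; a network connection in practice

    def _call(self, **call):
        reply = json.loads(self._send(json.dumps(call)))
        if not reply["ok"]:
            raise OSError(reply["error"])
        return reply["result"]

    def read(self, path: str) -> str:
        return self._call(op="read", path=path)

    def append(self, path: str, data: str) -> int:
        return self._call(op="append", path=path, data=data)


# Simulate both ends in one process: the "network" is a direct function call.
with tempfile.TemporaryDirectory() as submit_dir:
    path = os.path.join(submit_dir, "input.txt")
    with open(path, "w", encoding="utf-8") as f:
        f.write("hello")
    files = RemoteFileStub(send=shadow_handle)
    files.append(path, " world")
    contents = files.read(path)

print(contents)  # prints hello world
```

Because the job only ever talks to the stub, the file it reads and writes never needs to exist on the execute machine, which is the transparency the paragraph above describes.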

When the job completes or is aborted, the condor_starter removes every process spawned by the user job and frees any temporary scratch disk space the job used. This ensures that the execute machine is left in a clean state and that resources (such as processes or disk space) are not leaked.
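A rough Python sketch of this cleanup, for POSIX systems only, is given below. It assumes that running the job in its own process group is enough to find every process the job spawned; the text does not say how the condor_starter tracks a job's processes, and a job that deliberately leaves its process group would escape this simplified version. The function name `run_and_clean_up` and its parameters are hypothetical.

```python
import os
import shutil
import signal
import subprocess
import sys
import tempfile
from typing import List, Tuple


def run_and_clean_up(argv: List[str], timeout_s: float) -> Tuple[bool, str]:
    """Run a job in its own process group and scratch directory, then clean up.

    Returns (finished, scratch_path); finished is False if the job was aborted.
    """
    scratch = tempfile.mkdtemp(prefix="job_scratch_")
    # start_new_session puts the job in a new process group led by the job itself.
    proc = subprocess.Popen(argv, cwd=scratch, start_new_session=True)
    try:
        proc.wait(timeout=timeout_s)
        finished = True
    except subprocess.TimeoutExpired:
        finished = False  # treat a timeout as an abort
    finally:
        # Kill the whole group so that children spawned by the job are removed too.
        try:
            os.killpg(proc.pid, signal.SIGKILL)
        except ProcessLookupError:
            pass  # nothing left in the group
        proc.wait()
        # Free the scratch space, including any files the job left behind.
        shutil.rmtree(scratch, ignore_errors=True)
    return finished, scratch


job = [sys.executable, "-c", "open('tmp.dat', 'w').write('x')"]
finished, scratch = run_and_clean_up(job, timeout_s=30.0)
print(finished, os.path.exists(scratch))
```

The cleanup runs in a `finally` clause so that the same steps are taken whether the job completes or is aborted, mirroring the guarantee stated above.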