New Driver, New Functionality

(Based on materials and with the permission of http://www.fcenter.ru, a Russian-language Web site.)

Computer hardware components are joined to operate as an integral system using not only standards and interfaces, but also appropriate software. The lead role in this process is delegated to drivers, specialized programs that ensure operation of computer hardware. The functional capabilities of hardware components, including performance, depend on the efficiency of the driver code.

This is especially true for such important components as video adapters, which have numerous drivers. The Nvidia Detonator family serves as a good example of such drivers.

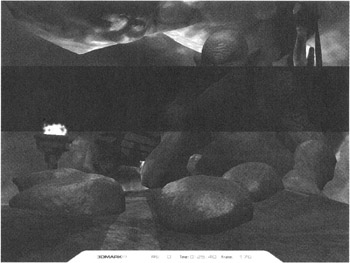

When the 3DMark03 test was released, Detonator 42.86 was the newest version. According to user reviews, the Detonator 42.68 driver that appeared later was optimized for 3DMark03. With this driver installed, video adapters based on GeForce FX chips show far better results in 3DMark03. However, it does not always work correctly with GeForce4 Titanium products. For example, a wide horizontal line may appear across the screen.

The screenshot in Fig. 13.12 is a good illustration of why you should select and test drivers carefully, even if the developers of those drivers guarantee high performance of the video subsystem. Some experts recommend using the Detonator 42.86 driver with video adapters based on GeForce4 chips. This recommendation can be found on many popular Web sites dedicated to hardware topics. This driver ensures reliable operation and high performance for that family of video adapters. For products of the GeForce FX series, however, the same experts recommend the Detonator 42.68 driver.

Figure 13.12: Incorrect operation of a driver

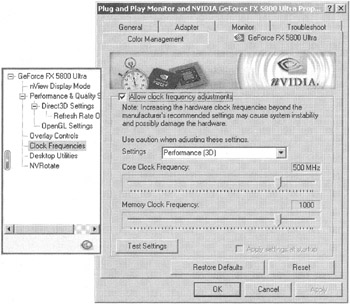

After the Detonator 42.68 driver is installed, the video adapter settings are placed, as usual, on the appropriate tab of the Properties window. Most driver settings are standard and of no special interest. However, some settings apply only to GeForce FX. These include specifying the video adapter clock frequencies using driver functionality.

For Nvidia GeForce FX 5800 Ultra, the clock frequencies of the graphics engine and video memory can be specified separately for 2D and 3D modes. By default, adapter clock frequencies are 300/600 MHz in 2D applications and 500/1,000 MHz in 3D applications.

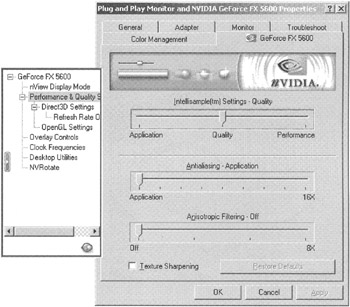

Another interesting tab contains the settings for working with the adapter and applications that use 3D functions.

Figure 13.13: GeForce FX 5800 Ultra video adapter

Figure 13.14: Setting GeForce FX 5800 Ultra clock frequencies using driver functionality

Figure 13.15: Setting GeForce FX 5800 Ultra operating modes via the driver

Here, you can choose from the following modes responsible for the speed-to-quality ratio: Application, Balanced, and Aggressive.

The Application mode ensures maximum quality. However, the best quality is achieved at the expense of performance. The Aggressive mode, on the contrary, ensures the strongest performance at the expense of decreased image quality.

In addition to these modes, it is possible to set full-screen smoothing, also known as anti-aliasing (2x, Quincunx, 4x, and several modes available only with Direct3D: 4xS, 6xS, and 8xS), and anisotropic filtering (2x, 4x, and 8x).

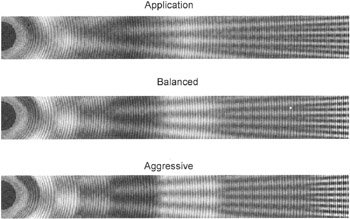

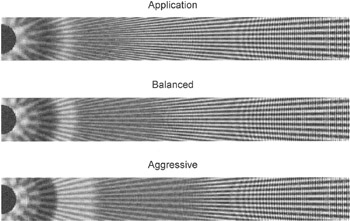

The influence of these modes on the quality of the display can be seen in Figs. 13.16–13.18.

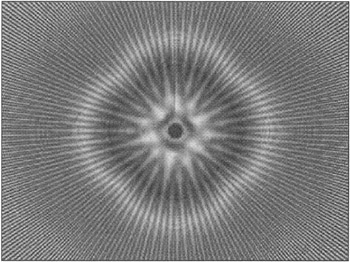

First, consider a small test program that displays a pyramid whose base coincides with the screen plane and whose vertex is far from the observer. The pyramid has numerous edges; therefore, it is perceived as a cone. A texture is applied to the side faces of the pyramid, MIP levels are colored, and trilinear filtering is used.

Figure 13.16: On-screen display of the test image

Now, consider this test image in different GeForce FX modes. Unfortunately, this illustration (256 shades of gray) cannot adequately convey the color transitions. (For a color version, see http://www.fcenter.ru/articles.shtml?videos/6101.) Still, the boundaries are distinct in the Aggressive mode, in contrast to the smooth transitions between MIP levels in the Application mode.

With trilinear filtering, transitions in the Application mode are smoothed. This happens because two values from adjacent MIP levels participate in creating the color of each pixel. These two values are summed with weight coefficients that depend on the pixel distance. This means that with trilinear filtering, halftones in the resulting image must change smoothly and continuously.
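The weighted sum described above can be sketched in a few lines of Python. This is only an illustration, not the code of the driver or the chip: the function name `trilinear_blend` is hypothetical, and the fractional level of detail (`lod`) is assumed here to stand in for the "pixel distance" that determines the weights.

```python
import math

def trilinear_blend(color_near, color_far, lod):
    """Blend two samples taken from adjacent MIP levels.

    color_near: sample from the more detailed level, floor(lod)
    color_far:  sample from the coarser level, floor(lod) + 1
    lod:        fractional level of detail (an assumed stand-in for
                the pixel distance mentioned in the text)
    """
    # The fractional part of lod is the weight of the coarser level.
    weight_far = lod - math.floor(lod)
    weight_near = 1.0 - weight_far
    return tuple(weight_near * n + weight_far * f
                 for n, f in zip(color_near, color_far))

# MIP levels colored red and blue, as in a test with colored levels.
red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
print(trilinear_blend(red, blue, 2.25))  # mostly red, a quarter blue
```

Because the weight changes continuously with `lod`, the resulting color passes gradually from one level to the next, which is why no sharp boundaries should be visible with true trilinear filtering.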

Figure 13.17: Dependence of the image quality on the mode, with trilinear filtering

Figure 13.18: Dependence of the image quality on the mode, with trilinear and anisotropic filtering

However, this behavior is characteristic only of the Application mode. When you choose the Balanced or Aggressive mode, smooth color transitions are no longer visible everywhere: regions of pure tone appear, separated by distinct boundaries.

This means that in the Balanced and Aggressive modes, GeForce FX uses a combination of bilinear and trilinear filtering instead of true trilinear filtering. In the Balanced mode, the portion of pixels that receive true trilinear filtering is less than half of the entire image area; in the Aggressive mode, the portion is less than a quarter.
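One way to picture such a mix is to confine the blending between MIP levels to a narrow band around each level boundary and to use a single level everywhere else. The sketch below models this idea; it is an assumption made for illustration, not a description of how the GeForce FX hardware works. The names `blend_weight` and `blended_share` and the `band` parameter are hypothetical.

```python
import math

def blend_weight(lod, band):
    """Weight of the coarser MIP level for a fractional level of detail.

    band = 1.0 reproduces ordinary trilinear filtering. A smaller band
    limits blending to a window centered on the midpoint between two
    levels; outside it the weight is 0 or 1, i.e. one level is used
    alone (bilinear filtering).
    """
    frac = lod - math.floor(lod)
    low = 0.5 - band / 2.0
    high = 0.5 + band / 2.0
    if frac <= low:
        return 0.0
    if frac >= high:
        return 1.0
    return (frac - low) / band

def blended_share(band, steps=1000):
    """Approximate share of the LOD range where two levels are mixed."""
    mixed = sum(1 for i in range(steps)
                if 0.0 < blend_weight(i / steps, band) < 1.0)
    return mixed / steps

# Hypothetical band widths chosen to mirror the observed proportions.
for name, band in (("Application", 1.0), ("Balanced", 0.4), ("Aggressive", 0.2)):
    print(name, blended_share(band))
```

In this model, a band narrower than 0.5 leaves less than half of the range truly blended, and a band narrower than 0.25 leaves less than a quarter, which matches the proportions observed for the Balanced and Aggressive modes.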

In addition to trilinear filtering, you can employ anisotropic filtering at its maximum level, 8x.

Besides mixing bilinear and trilinear filtering, the chip decreases the detail level of textures in the Balanced and Aggressive modes by displacing the MIP levels closer to the observer.
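Displacing MIP levels toward the observer is commonly expressed as a positive bias added to the computed level of detail. The following sketch shows the effect under that assumption. The function `select_mip_level`, its parameters, and the bias value are hypothetical; the source does not state what values the driver uses.

```python
import math

def select_mip_level(texels_per_pixel, lod_bias=0.0, max_level=8):
    """Return the fractional MIP level for a pixel.

    texels_per_pixel: how many texels of the base texture fall on one
                      screen pixel along one axis (grows with distance)
    lod_bias:         positive values switch to coarser levels sooner,
                      i.e. move the MIP levels closer to the observer
    """
    lod = math.log2(max(texels_per_pixel, 1.0)) + lod_bias
    # Clamp to the range of levels the texture actually has.
    return min(max(lod, 0.0), float(max_level))

# The same pixel without a bias and with a hypothetical bias of 0.5.
print(select_mip_level(4.0))        # 2.0
print(select_mip_level(4.0, 0.5))   # 2.5
```

With a positive bias, every pixel is textured from a coarser level than it would otherwise use, so textures lose detail while less texture data has to be fetched.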

This series of examples demonstrating the decrease of the image quality when changing the Application mode to the Balanced or Aggressive mode can be continued. However, these details go beyond the scope of this discussion, which concentrates on the capabilities of specialized software and its influence on the video adapter functionality.

Figure 13.19: Setting GeForce FX 5600 Ultra operating modes via the driver

When considering the entire range of video adapter drivers, it is necessary to point out that shortly after Detonator 42.68 became available, Detonator 43.45 was released. This new version of the driver differs from version 42.68 in several respects.

First, new full-screen smoothing modes were introduced for Nvidia GeForce FX 5800 Ultra: 8cx and 16x. These modes employ super-sampling and are also available for Nvidia GeForce FX 5600 Ultra.

The second interesting aspect of Detonator 43.45 is the renaming of modes.

The best image quality that can be provided by the chips of the GeForce FX family is available in the Application mode, where all features aimed at performance optimization are disabled. The second position is held by the mode that provides lower quality but ensures higher performance. In previous versions, it was named Balanced; it has been renamed Quality. Finally, there is the fastest mode, which provides the worst quality. This mode was previously known as Aggressive. In the newer version of the driver, its name has been changed to Performance.

It is not difficult to guess why Nvidia has renamed these modes without observing any logical rules. This approach is pure marketing. Most users of the driver, having seen the Quality mode in the settings window (the default setting), will believe this mode ensures the best quality. In reality, the video adapter won't ensure the best quality, which is available only in the Application mode. However, the video adapter will operate faster than if the Application mode were used.