Implementing High Availability

State Synchronization is a very important component for implementing High Availability. Without it, other firewalls that may be called in to take over will not know the current status of connections, and connectivity will break. However, State Synchronization is not the only piece of this equation. Another component must let the routers and hosts around the firewall know which firewall to use at any given time. Dynamic routing protocols can certainly be used to achieve this, but they are not always practical and come with their own problems; namely, they can create asymmetric routing.

All HA solutions for FireWall-1 provide some sort of virtual IP address, which is shared between the firewalls. At any given time, the active firewall owns a number of these virtual addresses, at least one on each physical interface except the synchronization interface (exactly how and when this happens is specific to each implementation). The result is a virtual firewall. Clients and routers can simply point to the virtual address where appropriate and can always be assured that some firewall will service it.

Asymmetric Routing

In asymmetric routing, packets on a connection take different paths coming into and going out of a given network, namely, your own network. Any time packets have multiple paths through a network, there is a possibility that they will take different paths. On the Internet as a whole, it is quite common for sent packets to take a slightly different path from received packets.

Asymmetric routing is not a problem if packets are simply being routed and connection state is not being tracked. If connection state must be tracked (for instance, when you are performing network security functions), asymmetric routing causes problems. Any HA solution must either ensure that asymmetric routing does not occur or have some way to deal with it when it does.

Consider the network diagram in Figure 13.5.

Figure 13.5. Sample network diagram with highly available firewalls

For 10.0.10.0/24, there are two possible routes to the Internet: mrhat and mrtwig. There are also two possible routes back to 10.0.10.0/24 from the Internet: mrhat and mrtwig. This means that it is possible to have an asymmetrical routing situation. For illustrative purposes, let's assume that mrhat is the default route for clients on the 10.0.10.0/24 network and that the Internet router routes all packets destined for 10.0.10.0/24 to mrtwig. This means there is an asymmetric routing condition. Let's also assume that both firewalls in question are synchronizing their state tables via Check Point's State Synchronization mechanism.

Connections originating from 10.0.10.0/24 and going to the Internet may actually work fairly well. They work better the longer the latency is between sending the first packet through mrhat and receiving its reply through mrtwig, because State Synchronization then has time to copy the new connection to mrtwig. Hosts out on the Internet can easily take more than 155 milliseconds to respond.

Connections originating from the Internet and destined for 10.0.10.0/24 will be slow to establish (if they establish at all), because the time between the packet's arrival on mrtwig, the response from the host on 10.0.10.0/24, and that response's departure via mrhat will likely be less than 155 milliseconds (in the preceding situation, it would be less than 10 milliseconds). This means State Synchronization will not have had an opportunity to do its job. If the packet received on mrtwig is a SYN packet and the packet received on mrhat is a SYN/ACK packet (i.e., the packets that start a TCP session), mrhat will see that the SYN/ACK is not part of an established connection and will drop it. About 3 seconds later,[1] the server on the 10.0.10.0/24 network, not having received an ACK packet to acknowledge its SYN/ACK, will resend the SYN/ACK. By that time, synchronization will have occurred, and the packet will be allowed through. Note that with UDP and other connectionless IP datagrams, retransmissions occur only if the application using them performs them, so it is quite possible that these packets will simply be dropped.

[1] This time period for resending a SYN/ACK response actually depends on the TCP/IP implementation. However, most TCP/IP stacks are based on BSD's TCP/IP stack, which has a default of 3 seconds.

The good news is that this problem has the potential to occur only within that 155 milliseconds or so right when the connection is being established. Once the connection is properly established and synchronized, an asymmetric condition is not a problem.
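The timing race described above can be sketched as a toy model. This is a minimal illustration, assuming a fixed synchronization delay of about 155 milliseconds (the figure used in the text) and a fixed 3-second retransmission interval (the BSD default from the footnote; real TCP stacks back off between retries). The function name and parameters are hypothetical; the model only reports when the first copy of a reply would arrive after the other firewall has learned about the connection.

```python
from typing import Optional


def first_accepted_ms(reply_latency_ms: float,
                      sync_window_ms: float = 155.0,
                      retry_ms: float = 3000.0,
                      retries: int = 3) -> Optional[float]:
    """Return the time (ms after the first packet) at which a reply sent via the
    *other* firewall is first accepted, or None if every copy arrives too early.

    Toy model: a copy is accepted only if it arrives after State Synchronization
    has had sync_window_ms to copy the connection to the other firewall.
    """
    for attempt in range(retries + 1):
        # Fixed retransmission interval; no exponential backoff in this model.
        arrives_at = reply_latency_ms + attempt * retry_ms
        if arrives_at >= sync_window_ms:
            return arrives_at
    # Every copy was dropped as "not part of an established connection".
    return None
```

For the inbound case in the text (a local server answering in under 10 milliseconds), first_accepted_ms(10) returns 3010.0: the first SYN/ACK is dropped and the retransmission gets through. A UDP reply with no application-level retry (retries=0) returns None.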

HA Solution Providers

You have several different choices of HA software and hardware products. The most common ones include those listed below.

Stonesoft's StoneBeat FullCluster for FireWall-1 (http://www.stonebeat.com): This software product runs on the same platform as FireWall-1. It provides hot-standby failover as well as failover with load sharing.

Rainfinity High Availability Software for Check Point VPN-1/FireWall-1 (http://www.rainfinity.com): Rainfinity delivers an accelerated HA platform for all gateway applications, from Internet connectivity to firewalls and content security, to ensure optimal performance across all network resources.

Check Point's ClusterXL (http://www.checkpoint.com): This software product is integrated into FireWall-1, although it requires a separate license. It provides hot-standby failover and load sharing.

Nokia IP Security Platforms (http://www.nokia.com/securitysolutions/): Nokia IP Security Platforms include support for VRRP, which provides failover capabilities to FireWall-1. Load balancing is not part of this solution but can easily be achieved with third-party hardware. Since IPSO 3.6, Nokia also provides patented IP Clustering technology, which adds the redundancy features of Dynamic Load Balancing and Active Session Failover for VPN traffic. Both of these features are included on the Nokia IP Security Platforms and do not require any extra Check Point licenses. Note that the IP30 and IP40 platforms also provide VRRP with the appropriate version of firmware (IP30 version 2.0 release and IP40 version 1.1, respectively).

Radware's Fireproof (http://www.radware.com): This hardware product provides hot-standby failover as well as failover with load sharing.

F5 Networks BIG-IP FireGuard (http://www.f5.com): This hardware product provides hot-standby failover as well as failover with load sharing.

From this list, you can see that there are two schools of thought: hardware-based solutions and software-based solutions. For High Availability, either solution works well, although software-based solutions do have somewhat of an edge because they can interact with FireWall-1 more directly. When load balancing is factored into the equation, hardware solutions quite simply scale better, although they tend to be somewhat more expensive. You can use both hardware and software HA systems together.

Any HA solution must address the problem of asymmetric routing. HA software vendors claim to be able to handle it. However, I am skeptical of any software product's ability to handle it well. Even with both firewalls synchronized, there is simply no way to synchronize fast enough to handle asymmetric connections in all situations. Either packets can be held by some other process until the synchronization process catches up, or packets can be delivered so that all firewalls hear them and only the right one responds, which seems wasteful. Either latency or throughput is compromised. Specialized hardware can do this much faster than a general-purpose platform can and is usually more scalable.

Load Balancing

When you have more than one firewall in parallel, the next logical question is, "How can I have each firewall take part in the overall network load to increase throughput?" Most people do not like the idea of purchasing extra equipment only to leave it sitting around unused most of the time.

Any effective load-balancing solution for FireWall-1 must handle packets for connections symmetrically (i.e., in and out through the same firewall), or at the very least, must be able to do this long enough for State Synchronization to catch up. The software approaches to load sharing exhibited by Stonesoft, Rainfinity, Check Point, and Nokia all share the same inherent design flaw: each firewall sees every packet destined for the cluster. Why is that? Because the virtual IP address used to represent the cluster is served via a multicast MAC address. Each cluster member will receive packets sent to this virtual MAC address and must do enough processing to determine whether or not the packet is one it needs to process.

What does this mean? It means that adding a second firewall does not double your potential throughput. It does increase it, but only by about 50%. A third firewall might increase throughput by roughly another 25%. In any case, the maximum available throughput will be the line speed of the link that connects a single firewall (e.g., 100Mbps Ethernet), because every cluster member must receive every packet on that link.
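To see why every member pays a cost for every packet, here is a small simulation. It assumes a hypothetical selection rule (an XOR of source and destination addresses, modulo the number of members) for deciding which member owns a packet; the actual algorithms used by StoneBeat, Rainfinity, ClusterXL, and Nokia are not described here. The point is only that each member inspects every frame sent to the shared multicast MAC address, even though just one member does the full processing.

```python
from ipaddress import IPv4Address
from typing import List, Tuple


def owner_of(src: str, dst: str, members: int) -> int:
    """Hypothetical rule: symmetric XOR hash modulo the cluster size."""
    return (int(IPv4Address(src)) ^ int(IPv4Address(dst))) % members


def simulate(frames: List[Tuple[str, str]], members: int) -> Tuple[List[int], List[int]]:
    """Count, per member, how many frames it inspects and how many it handles."""
    inspected = [0] * members
    handled = [0] * members
    for src, dst in frames:
        owner = owner_of(src, dst, members)
        for m in range(members):
            inspected[m] += 1      # every member receives the multicast frame
            if m == owner:
                handled[m] += 1    # only the owner does the full processing
    return inspected, handled
```

With 100 frames and two members, each member inspects all 100 frames, while the handled counts add up to 100.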

The following subsections describe how Check Point and Nokia both implement their firewall and/or VPN load-balancing solutions. The subsections after those describe other load-balancing techniques you can employ.

ClusterXL

ClusterXL is Check Point's own method for providing load balancing. It is built into the core FireWall-1 product and uses the existing State Synchronization mechanism. In addition to being able to fail over all connections as in a standard HA configuration, ClusterXL also allows failover and load sharing of VPN connections. Up to five gateways are supported in a single cluster that uses ClusterXL.

ClusterXL is configured in SmartDashboard/Policy Editor. When a cluster is configured as having ClusterXL installed, a separate frame becomes available for ClusterXL-specific configuration options. In this frame you can configure exactly how the load will be shared with respect to VPN traffic.

ClusterXL is an extra-cost item; that is, it is not included in the base price of FireWall-1. It is available on all platforms on which FireWall-1 NG is available except Nokia; however, ClusterXL for Nokia is planned for a future release.

Nokia IP Clustering

In 2000, Nokia acquired a company called Network Alchemy, which made VPN hardware that employed a clustering method able to scale extremely well. The products that originally employed this method are no longer being sold, but the clustering method has been integrated into the IPSO operating system since version 3.6.

As currently designed, IP Clustering is intended primarily to load balance VPN connections. In the future, it may load balance non-VPN connections as well. Up to four gateways can participate in an IP Cluster, with the most bang for the buck coming from three gateways.

IP Clustering is included in the IPSO operating system; it is not necessary to purchase a license for it. IP Clustering is configured in the Voyager interface of IPSO. Check Point's State Synchronization mechanism is used in addition to IP Clustering's own synchronization protocol.

Static Load-Balancing Techniques

A cheap way to load balance is to logically group your hosts behind the firewall. This involves the following tasks.

Hosts behind your gateway need to be configured with a static default route to the proper firewall for their group. Each group should be on a different logical network or at least be grouped on a reasonable subnet boundary.

Border routers need to be configured with different routes to different firewalls.

If VRRP (or something similar) is used, there must be multiple virtual IP addresses (one for each group) that can be failed over. This will provide resilience in case one of the firewalls fails.
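The failover requirement in the last task can be modeled in a few lines. This sketch is not an implementation of VRRP; it simply assumes that each group's virtual IP address has an ordered list of firewalls (standing in for VRRP priorities) and hands the address to the first firewall in that list that is still alive. The addresses and firewall names are hypothetical.

```python
from typing import Dict, List, Optional, Set


def vip_owners(preferences: Dict[str, List[str]], alive: Set[str]) -> Dict[str, Optional[str]]:
    """Map each group's virtual IP to the first live firewall in its preference list."""
    return {
        # None means no firewall in the list is left to serve this group.
        vip: next((fw for fw in order if fw in alive), None)
        for vip, order in preferences.items()
    }


# Hypothetical groups: one set of hosts prefers fw1, the other prefers fw2.
PREFERENCES: Dict[str, List[str]] = {
    "10.0.1.254": ["fw1", "fw2"],
    "10.0.2.254": ["fw2", "fw1"],
}
```

When fw1 fails, vip_owners(PREFERENCES, {"fw2"}) moves 10.0.1.254 to fw2, so the first group keeps the same default route while one firewall carries both groups.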

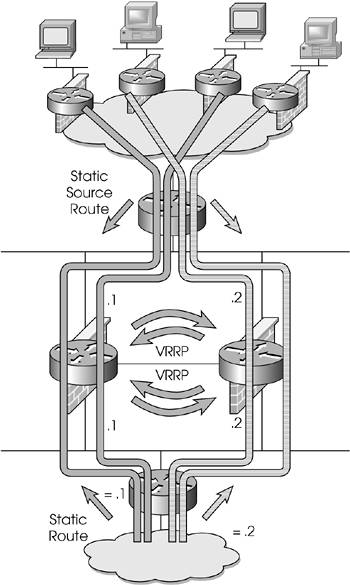

Figure 13.6 illustrates static load balancing in action.

Figure 13.6. Static load balancing

In Figure 13.6, the hosts on the bottom left use the .1 firewall as their default route, the hosts on the bottom right use the .2 firewall, and the border router has static routes for both sets of hosts back to their respective firewalls.

You can also do something similar with multiple site-to-site VPNs, although it requires an internal router capable of doing policy routing based on source addresses to ensure that packets go to the proper firewall. Figure 13.7 illustrates static load balancing with policy routing.

Figure 13.7. Static load balancing with VPN
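The internal router's job in Figure 13.7 is source-based policy routing. A minimal sketch, assuming a hypothetical ordered list of (source prefix, firewall) rules where the first matching rule wins, uses Python's standard ipaddress module; on a real router this would be expressed in the router's own policy-routing configuration.

```python
from ipaddress import ip_address, ip_network
from typing import List, Tuple

# Hypothetical rules, checked in order: (source prefix, firewall to use).
POLICY: List[Tuple[str, str]] = [
    ("10.0.1.0/24", "fw1"),
    ("10.0.2.0/24", "fw2"),
]


def next_hop_for(src: str, policy: List[Tuple[str, str]] = POLICY, default: str = "fw1") -> str:
    """Choose the firewall by the packet's source address, not its destination."""
    addr = ip_address(src)
    for prefix, firewall in policy:
        if addr in ip_network(prefix):
            return firewall
    # Sources that match no rule fall back to a default firewall.
    return default
```

Because the decision depends on the source address, traffic from each group always reaches the firewall that terminates that group's VPN, regardless of where it is going.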

The main issue with static load balancing is that load distribution is not automatic. If one part of your network starts consuming more bandwidth, you will have to manually adjust the load balancing. However, static load balancing is relatively inexpensive and straightforward to set up and maintain.

Load Balancing with Switches

Thus far, I have assumed a single inbound and outbound connection. Although NICs and hubs/switches are generally pretty reliable, from time to time these components fail. To provide more throughput and greater resilience, you should double the number of connections and switches, as shown in Figure 13.8. Each interface must be associated with a logically different subnet, which gives you the ability to use multiple switches.

Figure 13.8. Load balancing with switches

One advantage to this method is increased theoretical throughput. You now have two interfaces, which means you can potentially send twice as much data. Unfortunately, only the most powerful machines will be able to fully use both interfaces at maximum capacity. However, some modest performance gains can still be achieved, even without full utilization.

Dynamic Load Balancing

Dynamic load balancing is generally preferable to static load balancing because it usually provides a better load distribution and accommodates changes in your network more readily. However, extra care must be taken to ensure symmetry. Most load-balancing techniques use some sort of hash function that is either nondeterministic or not symmetric, so a reply can be sent to a different firewall than the packet it answers. This creates asymmetric routing. Deterministic, symmetric forwarding on both sides of the firewall ensures that the same path is taken in both directions. This also provides a nice way to load balance.

Deterministic load balancing can also be done with a hash. Most load balancers perform an XOR of the source and destination IP addresses. This produces the same result whether a packet is going from host A to host B or from host B to host A, as shown in Figure 13.9.

Assuming only two paths (i.e., two parallel firewalls), you simply look at the last bit of the XOR result: a 0 means pass the packet to one firewall; a 1 means send it to the other. Using hashing alone, you are limited to numbers of firewalls that are powers of 2 (2, 4, 8, 16, and so on), because a path is selected by examining the last few bits of the result. However, most systems enhance the hashing mechanism to support any number of firewalls, and they include methods that check the chosen path before using it; these methods require their own sort of state synchronization.

Figure 13.9. XOR of incoming and outgoing packets by IP

Incoming packet
Source IP        172.16.64.1     10101100.00010000.01000000.00000001
Destination IP   192.168.30.65   11000000.10101000.00011110.01000001
XOR              108.184.94.64   01101100.10111000.01011110.01000000

Outgoing packet
Source IP        192.168.30.65   11000000.10101000.00011110.01000001
Destination IP   172.16.64.1     10101100.00010000.01000000.00000001
XOR              108.184.94.64   01101100.10111000.01011110.01000000
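The XOR technique in Figure 13.9 is easy to check in code. This sketch uses Python's standard ipaddress module; pick_firewall is a hypothetical helper. For powers of 2 it looks at the low-order bits, as described above; for other counts it falls back to a modulo, which is just one possible way to extend the scheme and not necessarily what any given product does.

```python
from ipaddress import IPv4Address


def xor_hash(src: str, dst: str) -> int:
    """Symmetric hash: XOR of the two 32-bit addresses."""
    return int(IPv4Address(src)) ^ int(IPv4Address(dst))


def pick_firewall(src: str, dst: str, n_firewalls: int) -> int:
    """Return a firewall index in range(n_firewalls) for this packet."""
    h = xor_hash(src, dst)
    if (n_firewalls & (n_firewalls - 1)) == 0:
        # Power of 2: use the low-order bits (for 2 firewalls, just the last bit).
        return h & (n_firewalls - 1)
    # Hypothetical extension for other counts; real products use their own methods.
    return h % n_firewalls
```

xor_hash("172.16.64.1", "192.168.30.65") gives the same 108.184.94.64 shown in the figure, and swapping the arguments gives the same value, so with two firewalls both directions select firewall 0 (the last bit of 64 is 0).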

Because of these accessible techniques, you can now build a fully meshed solution with resilient load balancers and firewalls. Many different products can perform this function, including products from Nortel Networks, Cisco, Cabletron, F5, Foundry, Radware, and TopLayer. Once the connection is established and state synchronization takes place, a failure can occur in any component, and the connections will continue to flow (see Figure 13.10).

Figure 13.10. Dynamic load balancing

Network Address Translation

NAT makes load balancing much more complex, mainly because packets look different inside and outside the firewall. However, if only the source or destination IP address is subject to NAT, you can still use a hash function to accomplish load balancing by hashing against the IP address that does not change.

Consider Figure 13.11. The machine with IP address 192.168.32.2 is accessed via 128.0.0.24 on the Internet by means of NAT. If 192.168.32.2 initiates a connection to the Internet, the destination IP address will not change. Conversely, if something initiates a connection to 128.0.0.24, the source address will not change.

Figure 13.11. Dynamic load balancing with NAT

Therefore, on the outside routers, you can perform a hash based on the source IP address. On the inside routers, you can perform a hash based on the destination IP address. In both cases the hashed address belongs to the host on the Internet, which NAT does not change, so both hashes return the same value, allowing you to forward all traffic for the connection through a particular firewall. This technique will not work with dual NAT (i.e., where source and destination are both translated) or in a VPN. The next subsection discusses load balancing with VPNs.
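The NAT case can be sketched the same way. This assumes a hypothetical hash that simply takes the 32-bit address modulo the number of firewalls, and uses 203.0.113.7 (a documentation address) as the host on the Internet. Outside routers only forward Internet-originated packets toward the firewalls, so they hash the source; inside routers only forward internally originated packets toward the firewalls, so they hash the destination.

```python
from ipaddress import IPv4Address


def _addr_hash(ip: str, n_firewalls: int) -> int:
    # Hypothetical hash: the 32-bit address modulo the number of firewalls.
    return int(IPv4Address(ip)) % n_firewalls


def outside_pick(src: str, dst: str, n_firewalls: int) -> int:
    # Packets arriving from the Internet: the source is the Internet host,
    # which single-sided NAT never rewrites.
    return _addr_hash(src, n_firewalls)


def inside_pick(src: str, dst: str, n_firewalls: int) -> int:
    # Packets leaving the internal network: the destination is the Internet
    # host, again untouched by NAT.
    return _addr_hash(dst, n_firewalls)
```

For a connection to 128.0.0.24 (translated to 192.168.32.2), outside_pick on the inbound packet and inside_pick on the reply hash the same Internet address, so they select the same firewall. Dual NAT breaks this because no address survives translation on both sides.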

Site-to-Site VPNs

The problem you have with a VPN is that, from the outside world, the packets look like they are coming from host A and going to host B, even though inside the packet it may be host X going to host Y. This is because the packets are encapsulated and encrypted. Encrypted packets have zero correlation to their unencrypted counterparts, so none of the hashing techniques that have been discussed thus far will handle them.

What is required is a system that maps a particular application or connection to a next-hop Ethernet address. This can be done with a fairly sophisticated hash table that stores where to forward each packet. A table entry might look like this:

(hash key: [srcIP: ingress if, ingress MAC], [dstIP: egress if, egress MAC])

When a packet arrives at the load balancer, a symmetric hash is performed, usually on layer 3 and 4 information. You then look in the table for an entry with that hash value and check whether either the source or the destination IP address stored in the entry matches the source address of the received packet. If there is a match, you forward the packet to the MAC address on the other side of the entry (the egress MAC if the packet's source matches the entry's source IP, the ingress MAC if it matches the entry's destination IP) and refresh the table entry. If there is no match, you determine the ingress and egress interfaces and MAC addresses, create a new table entry, and forward accordingly. Figure 13.12 shows a flowchart of this process.

Figure 13.12. Flowchart of dynamic load balancing with VPNs
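The table lookup described above can be sketched as follows. This is a minimal model under several assumptions: the symmetric hash is an XOR of the addresses and of the ports (0 for ESP, which has no ports), choose_egress is a hypothetical callback standing in for whatever routing or load decision picks the next hop for a new flow, and entries are aged out after max_age seconds of inactivity. Matching follows the text: an entry matches if its hash equals the packet's hash and either of its stored addresses equals the packet's source address.

```python
import time
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import Callable, Dict, List, Tuple

Hop = Tuple[str, str]  # (interface, next-hop MAC address)


@dataclass
class Side:
    ip: str     # address on this side of the flow
    iface: str  # load-balancer interface facing that address
    mac: str    # next-hop MAC address toward that address


@dataclass
class FlowEntry:
    src: Side         # where the first packet of the flow came from
    dst: Side         # where the first packet of the flow was sent
    last_seen: float  # refreshed on every match; used for aging


def symmetric_hash(src_ip: str, dst_ip: str, sport: int = 0, dport: int = 0) -> int:
    # XOR is commutative, so swapping source and destination gives the same value.
    return (int(IPv4Address(src_ip)) ^ int(IPv4Address(dst_ip))) ^ (sport ^ dport)


class FlowTable:
    def __init__(self, choose_egress: Callable[[str, str], Hop],
                 max_age: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.choose_egress = choose_egress  # hypothetical next-hop picker for new flows
        self.max_age = max_age
        self.clock = clock
        self.entries: Dict[int, List[FlowEntry]] = {}

    def forward(self, src_ip: str, dst_ip: str, in_iface: str, in_mac: str,
                sport: int = 0, dport: int = 0) -> Hop:
        now = self.clock()
        h = symmetric_hash(src_ip, dst_ip, sport, dport)
        for entry in self.entries.get(h, []):
            if entry.src.ip == src_ip:   # same direction as the first packet
                entry.last_seen = now
                return entry.dst.iface, entry.dst.mac
            if entry.dst.ip == src_ip:   # reply: send it back the way the flow came in
                entry.last_seen = now
                return entry.src.iface, entry.src.mac
        # No match: pick a next hop, remember both sides, and forward.
        out_iface, out_mac = self.choose_egress(src_ip, dst_ip)
        entry = FlowEntry(Side(src_ip, in_iface, in_mac),
                          Side(dst_ip, out_iface, out_mac), now)
        self.entries.setdefault(h, []).append(entry)
        return out_iface, out_mac

    def age(self) -> None:
        """Drop entries not seen for more than max_age seconds."""
        now = self.clock()
        for h in list(self.entries):
            kept = [e for e in self.entries[h] if now - e.last_seen <= self.max_age]
            if kept:
                self.entries[h] = kept
            else:
                del self.entries[h]
```

For example, with elb1 = FlowTable(lambda s, d: ("elb1-3", "fwA-ext-mac")), a packet from 198.51.100.10 to 203.0.113.1 (standing in for F and C) arriving on elb1-1 from upstream-mac is sent out elb1-3, and the reply from 203.0.113.1 is sent back out elb1-1 to upstream-mac without consulting choose_egress again.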

For example, assume you have a situation similar to the one shown in Figure 13.10 except you are using load balancers that implement the technique discussed in this section. Let's follow an IPSec-encrypted TCP SYN packet as it enters the network from the Internet and the SYN/ACK reply as it exits. Let's call the load balancers on the outside of the firewalls elb1 and elb2, the two firewalls A and B, the two firewalls' virtual IP address C (which is also the gateway cluster address), and the load balancers inside the firewalls ilb1 and ilb2.

The IPSec packet has a source IP address of F and a destination IP address of C. It is received at elb1 via interface elb1-1. The packet is hashed to value x, and elb1 determines that no flow entry exists. The next hop for this packet is determined to be firewall A, out interface elb1-3. An entry is added to the table that looks like this:

(hash x, F [elb1-1, upstream router MAC], C [elb1-3, firewall-A's external MAC])

Firewall A receives the packet, accepts it, decrypts it, and forwards it to ilb1. The packet now has a source IP address of P and a destination IP address of Q. ilb1 hashes the packet to value y and determines that no flow entry exists. The next hop for this packet is determined to be an internal router, out interface ilb1-4. The packet was received on ilb1-1. An entry is added to the table that looks like this:

(hash y, P [ilb1-1, Firewall-A's internal MAC], Q [ilb1-4, downstream router MAC])

When the SYN/ACK packet comes back to ilb1, it has a source IP of Q and a destination IP of P. ilb1 hashes the packet to value y (remember, the hash function is symmetric). An entry for this hash exists in the table. Because Q matches the "destination" IP address of this entry (it is a reply, so the source and destination are reversed), the packet is forwarded out interface ilb1-1 to firewall A's internal MAC address. Firewall A receives the packet, accepts it, encrypts it, and forwards it out to elb1, where a similar process takes place.

This process is beneficial because it is interface-independent. The forwarding is also unit-independent, so NAT and VPNs will work. The approach is particularly clever because the forwarding technique is deterministic: it does not matter that the switch does not know of a flow in advance. The hash table entries obviously have to be aged out. The table can be viewed as a sophisticated ARP table.

Products by Foundry and F5 support load balancing as described in this section.