Processing Large XML Documents


As you have seen, there are often many different techniques to accomplish the same task with XML. In many cases you can choose whichever solution lets you write less code that is easier to maintain; but when you need to process a large number of XML documents, or very large ones, you must consider efficiency.

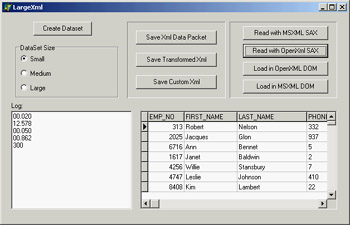

Discussing theory by itself is not terribly useful, so I've built an example you can use (and modify) to test different solutions. The example is called LargeXml, and it covers a specific area: moving data from a database to an XML file and back. The example can open a dataset (using dbExpress) and then replicate the data many times in a ClientDataSet in memory. The structure of the in-memory ClientDataSet is cloned from that of the data access component:

SimpleDataSet1.Open;
ClientDataSet1.FieldDefs := SimpleDataSet1.FieldDefs;
ClientDataSet1.CreateDataSet;

After you use a radio group to choose the amount of data to process (some options require minutes on a slow computer), the program clones the data with this code:

while ClientDataSet1.RecordCount < nCount do
begin
  // jump to a random source record (RecNo is one-based)
  SimpleDataSet1.RecNo := Random (SimpleDataSet1.RecordCount) + 1;
  ClientDataSet1.Insert;
  // new random key in the first field, the other fields copied as strings
  ClientDataSet1.Fields [0].AsInteger := Random (10000);
  for I := 1 to SimpleDataSet1.FieldCount - 1 do
    ClientDataSet1.Fields [I].AsString := SimpleDataSet1.Fields [I].AsString;
  ClientDataSet1.Post;
end;
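The replication loop can be tried without a database. The following console program is a minimal sketch that rests on a few assumptions. The two datasets are replaced by arrays of a hypothetical TEmployee record, and CloneRandomRows is a hypothetical name. The steps are the same as in the loop above: pick a random source row, copy it, and give the copy a new random key.

```pascal
program CloneRows;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

type
  // Hypothetical row type: Key plays the role of Fields[0],
  // the other fields are the ones copied from the source dataset
  TEmployee = record
    Key: Integer;
    FirstName: string;
    LastName: string;
  end;
  TEmployeeArray = array of TEmployee;

// Builds nCount rows by sampling random source rows, like the
// while loop that fills ClientDataSet1 from SimpleDataSet1
function CloneRandomRows(const Source: TEmployeeArray;
  nCount: Integer): TEmployeeArray;
var
  Count: Integer;
begin
  if (nCount <= 0) or (Length(Source) = 0) then
  begin
    Result := nil;
    Exit;
  end;
  SetLength(Result, nCount);
  Count := 0;
  while Count < nCount do
  begin
    // Random(N) returns 0..N-1, which already suits a zero-based array
    // (the dataset version adds 1 because RecNo is one-based)
    Result[Count] := Source[Random(Length(Source))];
    Result[Count].Key := Random(10000); // new key, other fields kept
    Inc(Count);
  end;
end;

var
  Source, Copies: TEmployeeArray;
  I: Integer;
begin
  Randomize;
  SetLength(Source, 2);
  Source[0].Key := 1;
  Source[0].FirstName := 'Ann';
  Source[0].LastName := 'Lee';
  Source[1].Key := 2;
  Source[1].FirstName := 'Bob';
  Source[1].LastName := 'Ray';
  Copies := CloneRandomRows(Source, 5);
  for I := 0 to High(Copies) do
    WriteLn(Copies[I].Key, ' ', Copies[I].FirstName, ' ', Copies[I].LastName);
end.
```

Each copied row keeps the field values of its source row, so the output contains many duplicates with different keys. This matches what the LargeXml example needs for a volume test.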

From a ClientDataSet to an XML Document

Now that the program has a (large) dataset in memory, it provides three different ways to save the dataset to a file. The first is to save the XMLData of the ClientDataSet directly to a file, obtaining an attribute-based document. This is probably not the format you want, so the second solution is to apply an XmlMapper transformation with an XMLTransformClient component. The third solution involves processing the dataset directly and writing out each record to a file:

procedure TForm1.btnSaveCustomClick(Sender: TObject);
var
  str: TFileStream;
  s: UTF8String; // byte-oriented, so Length (s) is the number of bytes to write
  i: Integer;
begin
  str := TFileStream.Create ('data3.xml', fmCreate);
  try
    ClientDataSet1.First;
    s := '<?xml version="1.0" standalone="yes" ?><employee>';
    str.Write(s[1], Length (s));
    while not ClientDataSet1.EOF do
    begin
      // one <employeeData> element per record, one child element per field
      s := '';
      for i := 0 to ClientDataSet1.FieldCount - 1 do
        s := s + MakeXmlStr (ClientDataSet1.Fields[i].FieldName,
          ClientDataSet1.Fields[i].AsString);
      s := MakeXmlStr ('employeeData', s);
      str.Write(s[1], Length (s));
      ClientDataSet1.Next;
    end;
    s := '</employee>';
    str.Write(s[1], Length (s));
  finally
    str.Free;
  end;
end;

This code uses a simple (but effective) support function to create XML nodes:

function MakeXmlStr (node, value: string): string;
begin
  Result := '<' + node + '>' + value + '</' + node + '>';
end;
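MakeXmlStr copies field values verbatim. As a result, a value containing & or < produces a malformed document. The following is a minimal sketch of an escaping variant. XmlEscape and MakeXmlStrSafe are hypothetical names. The sketch still assumes that field names are already valid XML element names, which is not guaranteed for every dataset.

```pascal
program EscapeXml;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils;

// Escapes markup characters in element text: '&' and '<' must be escaped,
// '>' is escaped as well for safety. '&' goes first so the entities
// produced by the later replacements are not escaped a second time.
function XmlEscape(const Value: string): string;
begin
  Result := StringReplace(Value, '&', '&amp;', [rfReplaceAll]);
  Result := StringReplace(Result, '<', '&lt;', [rfReplaceAll]);
  Result := StringReplace(Result, '>', '&gt;', [rfReplaceAll]);
end;

// Same shape as MakeXmlStr, but the value is escaped; the node name is
// assumed to be a valid XML element name already
function MakeXmlStrSafe(const Node, Value: string): string;
begin
  Result := '<' + Node + '>' + XmlEscape(Value) + '</' + Node + '>';
end;

begin
  WriteLn(MakeXmlStrSafe('Company', 'Smith & Sons <Ltd>'));
  // prints <Company>Smith &amp; Sons &lt;Ltd&gt;</Company>
end.
```

Each StringReplace call scans the whole string. When escaping millions of values for a very large document, a single-pass loop that builds the result character by character could therefore be cheaper.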

If you run the program, you can see the time taken by each operation, as shown in Figure 22.13. Saving the XMLData of the ClientDataSet is the fastest approach, but it probably doesn't produce the format you want. Custom streaming is only slightly slower. Keep in mind that this code doesn't require you to move the data into a ClientDataSet first, because you can apply it directly even to a unidirectional dbExpress dataset. Forget about the XmlMapper-based code for a large dataset: it is hundreds of times slower, even for a small dataset (I haven't been able to try it on a large dataset, because the process takes too long). For example, the 50 milliseconds required by custom streaming for a small dataset become more than 10 seconds with the mapping, and the resulting document is very similar.

Figure 22.13: The LargeXml example in action
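The custom streaming technique can also be tried outside the LargeXml form. The following console program is a minimal sketch under a few assumptions:

- Each row is a TStringList of Name=Value pairs standing in for a dataset record.
- SaveRows and WriteUtf8 are hypothetical helpers.
- The output goes to any TStream, so the target can be a TFileStream, as in btnSaveCustomClick, or a TMemoryStream, as here.

```pascal
program StreamXml;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

uses
  Classes;

function MakeXmlStr(const Node, Value: string): string;
begin
  Result := '<' + Node + '>' + Value + '</' + Node + '>';
end;

// Converts S to UTF-8 and writes its bytes; with a UTF8String,
// Length(U) is a byte count, which is what WriteBuffer expects
procedure WriteUtf8(Stream: TStream; const S: string);
var
  U: UTF8String;
begin
  U := UTF8Encode(S);
  if Length(U) > 0 then
    Stream.WriteBuffer(U[1], Length(U));
end;

// Writes one <employeeData> element per row, one child element per
// Name=Value pair; no DOM is built, each record is written once formatted
procedure SaveRows(Stream: TStream; const Rows: array of TStringList);
var
  R, F: Integer;
  S: string;
begin
  WriteUtf8(Stream,
    '<?xml version="1.0" encoding="UTF-8" standalone="yes" ?><employee>');
  for R := 0 to High(Rows) do
  begin
    S := '';
    for F := 0 to Rows[R].Count - 1 do
      S := S + MakeXmlStr(Rows[R].Names[F], Rows[R].ValueFromIndex[F]);
    WriteUtf8(Stream, MakeXmlStr('employeeData', S));
  end;
  WriteUtf8(Stream, '</employee>');
end;

var
  Row: TStringList;
  Mem: TMemoryStream;
  Xml: UTF8String;
begin
  Row := TStringList.Create;
  Mem := TMemoryStream.Create;
  try
    Row.Add('FirstName=Ann');
    Row.Add('LastName=Lee');
    SaveRows(Mem, [Row]);
    // read the bytes back to show the result
    SetLength(Xml, Mem.Size);
    Mem.Position := 0;
    Mem.ReadBuffer(Xml[1], Mem.Size);
    WriteLn(Xml);
  finally
    Mem.Free;
    Row.Free;
  end;
end.
```

Passing a TStream rather than a file name is a design choice of this sketch. It keeps the writer independent of the destination and makes the output easy to check in memory.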


From an XML Document to a ClientDataSet

Once you have a large XML document, produced by a program (as in this case) or obtained from an external source, you need to process it. As you have seen, XmlMapper support is far too slow, so you are left with three alternatives: an XSL transformation, SAX, or the DOM. XSL transformations will probably be fast enough, but in this example I've parsed the document with SAX, because it's the fastest approach and doesn't require much code. The program can also load the document into a DOM, but I haven't written the code to navigate the DOM and save the data back to a ClientDataSet.

In both cases, I've tested the OpenXml engine against MSXML. This also lets you compare the two SAX solutions side by side, because (unfortunately) their code is slightly different. I can summarize the results here: the MSXML SAX is slightly faster than the OpenXml SAX (the difference is about 20 percent), whereas loading the document into a DOM shows a large advantage in favor of MSXML.

The MSXML SAX code uses the same architecture discussed in the SaxDemo1 example, so here I've listed only the code of the event handlers. As you can see, at the beginning of an employeeData element the handler inserts a new record, which is posted when the same element is closed. Lower-level elements are added as fields of the current record. Here is the code:

procedure TMyDataSaxHandler.startElement(var strNamespaceURI, strLocalName,
  strQName: WideString; const oAttributes: IVBSAXAttributes);
begin
  inherited;
  if strLocalName = 'employeeData' then
    Form1.ClientDataSet2.Insert;
  strCurrent := '';
end;

procedure TMyDataSaxHandler.characters(var strChars: WideString);
begin
  inherited;
  strCurrent := strCurrent + RemoveWhites(strChars);
end;

procedure TMyDataSaxHandler.endElement(var strNamespaceURI, strLocalName,
  strQName: WideString);
begin
  if strLocalName = 'employeeData' then
    Form1.ClientDataSet2.Post;
  if stack.Count > 2 then
    Form1.ClientDataSet2.FieldByName (strLocalName).AsString := strCurrent;
  inherited;
end;
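To see the event-driven logic in isolation, here is a minimal sketch with no dependency on MSXML or OpenXml. TDataHandler is a hypothetical class that keeps the same stack-depth rule: elements nested more than two levels deep are fields. It stores each record in a TStringList of Name=Value pairs instead of a ClientDataSet and omits the RemoveWhites call. The main program stands in for the parser by firing, by hand, the events a SAX reader would produce.

```pascal
program MiniSax;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

uses
  Classes, Contnrs;

type
  // Hypothetical handler following the same rules as TMyDataSaxHandler
  TDataHandler = class
  private
    FStack: TStringList;  // names of the open elements
    FCurrent: string;     // text collected for the current element
    FRecord: TStringList; // record being filled (the "Insert" state)
  public
    Records: TObjectList; // posted records, each a list of Name=Value
    constructor Create;
    destructor Destroy; override;
    procedure StartElement(const Name: string);
    procedure Characters(const Text: string);
    procedure EndElement(const Name: string);
  end;

constructor TDataHandler.Create;
begin
  inherited Create;
  FStack := TStringList.Create;
  Records := TObjectList.Create; // owns and frees the posted records
end;

destructor TDataHandler.Destroy;
begin
  FRecord.Free; // only non-nil if the input ended inside a record
  Records.Free;
  FStack.Free;
  inherited;
end;

procedure TDataHandler.StartElement(const Name: string);
begin
  FStack.Add(Name);
  if Name = 'employeeData' then
    FRecord := TStringList.Create; // like ClientDataSet2.Insert
  FCurrent := '';
end;

procedure TDataHandler.Characters(const Text: string);
begin
  FCurrent := FCurrent + Text; // text may arrive in several chunks
end;

procedure TDataHandler.EndElement(const Name: string);
begin
  if Name = 'employeeData' then
  begin
    Records.Add(FRecord); // like ClientDataSet2.Post
    FRecord := nil;
  end;
  // nested deeper than <employee><employeeData>: a field of the record
  if (FStack.Count > 2) and Assigned(FRecord) then
    FRecord.Add(Name + '=' + FCurrent); // Add also keeps empty values
  FStack.Delete(FStack.Count - 1);
end;

var
  H: TDataHandler;
begin
  H := TDataHandler.Create;
  try
    // the events a SAX reader would fire for
    // <employee><employeeData><FirstName>Ann</FirstName></employeeData></employee>
    H.StartElement('employee');
    H.StartElement('employeeData');
    H.StartElement('FirstName');
    H.Characters('An'); // a parser may deliver text in pieces
    H.Characters('n');
    H.EndElement('FirstName');
    H.EndElement('employeeData');
    H.EndElement('employee');
    WriteLn(H.Records.Count, ' record(s), first name: ',
      TStringList(H.Records[0]).Values['FirstName']);
  finally
    H.Free;
  end;
end.
```

Because the handler only keeps the open-element stack and the current record, its memory use does not grow with the size of the document. This is the property that makes SAX attractive for large files.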

The code of the event handlers in the OpenXml version is similar. What changes is the interface of the methods and the names of the parameters, as the class declaration shows:

type
  TDataSaxHandler = class (TXmlStandardHandler)
  protected
    stack: TStringList;
    strCurrent: string;
  public
    constructor Create(aowner: TComponent); override;
    function endElement(const sender: TXmlCustomProcessorAgent;
      const locator: TdomStandardLocator;
      namespaceURI, tagName: wideString): TXmlParserError; override;
    function PCDATA(const sender: TXmlCustomProcessorAgent;
      const locator: TdomStandardLocator; data: wideString):
      TXmlParserError; override;
    function startElement(const sender: TXmlCustomProcessorAgent;
      const locator: TdomStandardLocator; namespaceURI, tagName: wideString;
      attributes: TdomNameValueList): TXmlParserError; override;
    destructor Destroy; override;
  end;

Invoking the OpenXml SAX engine is also more complex, as shown in the following code (from which I've removed the code for creating the dataset, the timing, and the logging):

procedure TForm1.btnReadSaxOpenClick(Sender: TObject);
var
  agent: TXmlStandardProcessorAgent;
  reader: TXmlStandardDocReader;
  filename: string;
begin
  Log := memoLog.Lines;
  filename := ExtractFilePath (Application.Exename) + 'data3.xml';
  agent := TXmlStandardProcessorAgent.Create(nil);
  reader := TXmlStandardDocReader.Create (nil);
  try
    reader.NextHandler := TDataSaxHandler.Create (nil); // our custom class
    agent.reader := reader;
    agent.processFile(filename, filename);
  finally
    agent.free;
    reader.free;
  end;
end;